Microsoft AZ-204 Dumps [2022] to help you pass the Developing Solutions for Microsoft Azure exam

Why can AZ-204 dumps help you pass the Developing Solutions for Microsoft Azure exam?

First, the AZ-204 dumps questions are exam questions designed by experienced certified experts based on the core content of the Developing Solutions for Microsoft Azure exam. They cover all of the following core skills:

Develop Azure compute solutions

Develop for Azure storage

Implement Azure security

Monitor, troubleshoot, and optimize Azure solutions

Connect to and consume Azure services and third-party services

Secondly, the latest update of the AZ-204 dumps questions includes 347 exam questions and answers and is guaranteed to be effective immediately.

Finally, whether or not you have experience, the leads4pass AZ-204 dumps questions come with both PDF and VCE study modes, which are easy to learn from, fast, and effective, and help you pass the Developing Solutions for Microsoft Azure certification exam.

You can first try the free AZ-204 dumps questions below.

Question 1:

You need to secure the Shipping Logic App. What should you use?

A. Azure App Service Environment (ASE)

B. Integration Service Environment (ISE)

C. VNet service endpoint

D. Azure AD B2B integration

Correct Answer: B

Scenario: The Shipping Logic App requires secure resources for the corporate VNet and uses dedicated storage resources with a fixed costing model.

You can access Azure Virtual Network resources from Azure Logic Apps by using integration service environments (ISEs).

Sometimes, your logic apps and integration accounts need access to secured resources, such as virtual machines (VMs) and other systems or services, that are inside an Azure virtual network. To set up this access, you can create an integration service environment (ISE) where you can run your logic apps and create your integration accounts.

Reference: https://docs.microsoft.com/en-us/azure/logic-apps/connect-virtual-network-vnet-isolated-environment-overview

Question 2:

You need to configure the ContentUploadService deployment.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Add the following markup to line CS23: types: Private

B. Add the following markup to line CS24: osType: Windows

C. Add the following markup to line CS24: osType: Linux

D. Add the following markup to line CS23: types: Public

Correct Answer: A

Scenario: All internal services must be accessible only from internal virtual networks (VNets).

There are three network location types: Private, Public, and Domain.

Reference: https://devblogs.microsoft.com/powershell/setting-network-location-to-private/

Question 3:

You need to investigate the HTTP server log output to resolve the issue with the ContentUploadService. Which command should you use first?

A. az webapp log

B. az ams live-output

C. az monitor activity-log

D. az container attach

Correct Answer: C

Scenario: Users of the ContentUploadService report that they occasionally see HTTP 502 responses on specific pages.

“502 Bad Gateway” and “503 Service Unavailable” are common errors for apps hosted in Azure App Service.

Microsoft Azure publishes a notice each time there is a service interruption or performance degradation.

The az monitor activity-log command manages activity logs.

Note: Troubleshooting can be divided into three distinct tasks, in sequential order:

1. Observe and monitor application behavior
2. Collect data
3. Mitigate the issue

Reference: https://docs.microsoft.com/en-us/cli/azure/monitor/activity-log

Question 4:

You need to monitor ContentUploadService according to the requirements. Which command should you use?

A. az monitor metrics alert create -n alert -g … --scopes … --condition "avg Percentage CPU > 8"

B. az monitor metrics alert create -n alert -g … --scopes … --condition "avg Percentage CPU > 800"

C. az monitor metrics alert create -n alert -g … --scopes … --condition "CPU Usage > 800"

D. az monitor metrics alert create -n alert -g … --scopes … --condition "CPU Usage > 8"

Correct Answer: B

Scenario: An alert must be raised if the ContentUploadService uses more than 80 percent of available CPU cores.

Reference: https://docs.microsoft.com/sv-se/cli/azure/monitor/metrics/alert

Question 5:

You need to authenticate the user to the corporate website as indicated by the architectural diagram. Which two values should you use?

Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. ID token signature

B. ID token claims

C. HTTP response code

D. Azure AD endpoint URI

E. Azure AD tenant ID

Correct Answer: AD

A: Claims in access tokens

JWTs (JSON Web Tokens) are split into three pieces:

1. Header: provides information about how to validate the token, including the type of token and how it was signed.
2. Payload: contains all of the important data about the user or app that is attempting to call your service.
3. Signature: the raw material used to validate the token.
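To make the three-part structure concrete, here is a minimal Python sketch, using only the standard library, that splits a compact JWT on its dots and base64url-decodes each piece. The helper names `split_jwt`, `b64url_decode`, and `b64url_encode` are hypothetical, and the sketch deliberately skips signature verification; a real service must validate the signature against the issuer's published signing keys (for example with a maintained JWT library) before trusting any claim.

```python
import base64
import json


def b64url_encode(data: bytes) -> str:
    """Base64url-encode bytes and strip the '=' padding, as JWTs do."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the '=' padding that JWTs omit."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def split_jwt(token: str):
    """Split a compact JWT into (header, payload, signature).

    Header and payload are decoded from JSON; the signature is returned as
    raw bytes. The signature is NOT verified here.
    """
    header_b64, payload_b64, signature_b64 = token.split(".")
    header = json.loads(b64url_decode(header_b64))
    payload = json.loads(b64url_decode(payload_b64))
    signature = b64url_decode(signature_b64)
    return header, payload, signature


# Usage with a locally built example token (not issued by Azure AD).
example = ".".join([
    b64url_encode(json.dumps({"typ": "JWT", "alg": "RS256"}).encode()),
    b64url_encode(json.dumps({"aud": "example-app-id"}).encode()),
    b64url_encode(b"not-a-real-signature"),
])
header, payload, signature = split_jwt(example)
print(header["alg"], payload["aud"])  # RS256 example-app-id
```

Because the header and payload are only encoded, not encrypted, anyone holding the token can read its claims; the signature is what makes them trustworthy.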

E: Your client can get an access token from either the v1.0 endpoint or the v2.0 endpoint using a variety of protocols.

Scenario: User authentication (see step 5 below)

The following steps detail the user authentication process:

1. The user selects Sign in on the website.
2. The browser redirects the user to the Azure Active Directory (Azure AD) sign-in page.
3. The user signs in.
4. Azure AD redirects the user's session back to the web application. The URL includes an access token.
5. The web application calls an API and includes the access token in the authentication header. The application ID is sent as the audience ('aud') claim in the access token.
6. The back-end API validates the access token.
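Step 6 can be sketched as a claims check. The following Python function is a hypothetical illustration, assuming the signature has already been verified and the payload decoded into a dict. It checks only the `aud`, `iss`, `exp`, and `nbf` claims, treats `aud` as a single string (the JWT specification also allows an array), and uses placeholder audience and issuer values rather than real tenant data.

```python
import time
from typing import List, Optional

# Placeholder values for illustration only.
EXPECTED_AUDIENCE = "example-app-id"
EXPECTED_ISSUER = "https://login.microsoftonline.com/example-tenant-id/v2.0"


def validate_claims(claims: dict, audience: str, issuer: str,
                    now: Optional[float] = None) -> List[str]:
    """Return a list of problems with the token's claims (empty means OK).

    Assumes the signature was already verified. Only aud, iss, exp and nbf
    are checked; aud is treated as a single string.
    """
    now = time.time() if now is None else now
    problems = []
    if claims.get("aud") != audience:
        problems.append("aud mismatch")  # token was issued for another app
    if claims.get("iss") != issuer:
        problems.append("iss mismatch")  # token came from an unexpected issuer
    exp = claims.get("exp")
    if exp is None or now >= exp:
        problems.append("token expired or missing exp")
    nbf = claims.get("nbf")
    if nbf is not None and now < nbf:
        problems.append("token not yet valid")
    return problems


claims = {"aud": EXPECTED_AUDIENCE, "iss": EXPECTED_ISSUER, "nbf": 1000, "exp": 2000}
print(validate_claims(claims, EXPECTED_AUDIENCE, EXPECTED_ISSUER, now=1500))  # []
```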

Reference: https://docs.microsoft.com/en-us/azure/api-management/api-management-access-restriction-policies

Question 6:

You need to ensure that all messages from Azure Event Grid are processed. What should you use?

A. Azure Event Grid topic

B. Azure Service Bus topic

C. Azure Service Bus queue

D. Azure Storage queue

E. Azure Logic App custom connector

Correct Answer: C

As a solution architect/developer, you should consider using Service Bus queues when:

Your solution needs to receive messages without having to poll the queue. With Service Bus, you can achieve it by using a long-polling receive operation using the TCP-based protocols that Service Bus supports.
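The difference between polling and a blocking (long-polling) receive can be illustrated without Azure at all. This Python sketch is an in-memory analogy built on the standard library's `queue.Queue`, not the Service Bus SDK: `long_poll_receive` (a hypothetical name) makes one call that waits up to `max_wait_time` seconds for a message, instead of repeatedly asking whether one has arrived.

```python
import queue
import threading
from typing import Optional


def long_poll_receive(q: queue.Queue, max_wait_time: float) -> Optional[str]:
    """Wait up to max_wait_time seconds for one message; return None on timeout.

    The caller blocks inside a single call instead of looping "is there a
    message yet?" and sleeping between checks.
    """
    try:
        return q.get(timeout=max_wait_time)
    except queue.Empty:
        return None


inbox: queue.Queue = queue.Queue()
# Simulate an event arriving 0.1 s after the receiver starts waiting.
threading.Timer(0.1, inbox.put, args=("grid-event-1",)).start()
print(long_poll_receive(inbox, max_wait_time=5.0))  # grid-event-1
print(long_poll_receive(inbox, max_wait_time=0.2))  # None (nothing else arrives)
```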

Reference:

https://docs.microsoft.com/en-us/azure/service-bus-messaging/service-bus-azure-and-service-bus-queues-compared-contrasted

Question 7:

You need to ensure that the solution can meet the scaling requirements for Policy Service. Which Azure Application Insights data model should you use?

A. an Application Insights dependency

B. an Application Insights event

C. an Application Insights trace

D. an Application Insights metric

Correct Answer: D

Application Insights provides three additional data types for custom telemetry:

Trace – used either directly, or through an adapter, to implement diagnostics logging using an instrumentation framework that is familiar to you, such as Log4Net or System.Diagnostics.

Event – typically used to capture user interaction with your service, and to analyze usage patterns.

Metric – used to report periodic scalar measurements.
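The distinction matters for scaling: an event records one occurrence, while a metric reports a scalar value per period that a scaling rule can compare against a threshold. The sketch below, in plain Python, aggregates hypothetical policy-action timestamps into per-minute counts and applies a hypothetical rule (`instances_needed`, with an assumed per-instance capacity) to turn that metric into an instance count. It is not the Application Insights SDK or the Azure autoscale engine.

```python
import math
from collections import Counter


def per_minute_counts(timestamps):
    """Aggregate raw event timestamps (in seconds) into a count per minute."""
    return Counter(int(ts // 60) for ts in timestamps)


def instances_needed(actions_per_minute: int, actions_per_instance: int) -> int:
    """Hypothetical scaling rule: enough instances for the load, at least one."""
    return max(1, math.ceil(actions_per_minute / actions_per_instance))


# Hypothetical policy-action timestamps, in seconds since some start time.
timestamps = [0, 10, 59, 60, 61, 125]
metric = per_minute_counts(timestamps)
print(sorted(metric.items()))  # [(0, 3), (1, 2), (2, 1)]
print(instances_needed(max(metric.values()), actions_per_instance=2))  # 2
```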

Scenario:

Policy service must use Application Insights to automatically scale with the number of policy actions that it is performing.

Reference:

https://docs.microsoft.com/en-us/azure/azure-monitor/app/data-model

Question 8:

You need to resolve a notification latency issue.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Set Always On to true.

B. Ensure that the Azure Function is using an App Service plan.

C. Set Always On to false.

D. Ensure that the Azure Function is set to use a consumption plan.

Correct Answer: AB

Azure Functions can run on either a Consumption Plan or a dedicated App Service Plan. If you run in a dedicated mode, you need to turn on the Always On setting for your Function App to run properly. The Function runtime will go idle after a few minutes of inactivity, so only HTTP triggers will actually “wake up” your functions. This is similar to how WebJobs must have Always On enabled.

Scenario: Notification latency: Users report that anomaly detection emails can sometimes arrive several minutes after an anomaly is detected.

Anomaly detection service: You have an anomaly detection service that analyzes log information for anomalies. It is implemented as an Azure Machine Learning model. The model is deployed as a web service. If an anomaly is detected, an Azure Function that emails administrators is called by using an HTTP WebHook.

Question 9:

You need to ensure receipt processing occurs correctly. What should you do?

A. Use blob properties to prevent concurrency problems

B. Use blob SnapshotTime to prevent concurrency problems

C. Use blob metadata to prevent concurrency problems

D. Use blob leases to prevent concurrency problems

Correct Answer: B

You can create a snapshot of a blob. A snapshot is a read-only version of a blob that's taken at a point in time. Once a snapshot has been created, it can be read, copied, or deleted, but not modified. Snapshots provide a way to back up a blob as it appears at a moment in time.

Scenario: Processing is performed by an Azure Function that uses version 2 of the Azure Function runtime. Once processing is completed, results are stored in Azure Blob Storage and an Azure SQL database. Then, an email summary is sent to the user with a link to the processing report. The link to the report must remain valid if the email is forwarded to another user.

Reference: https://docs.microsoft.com/en-us/rest/api/storageservices/creating-a-snapshot-of-a-blob

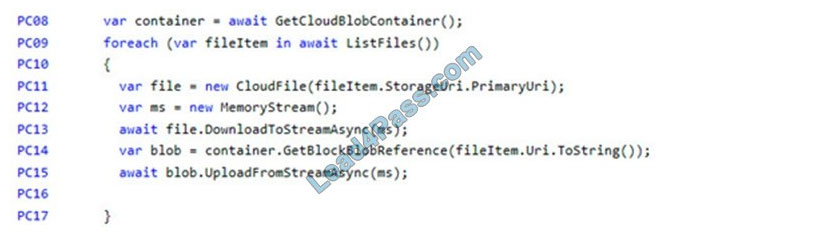

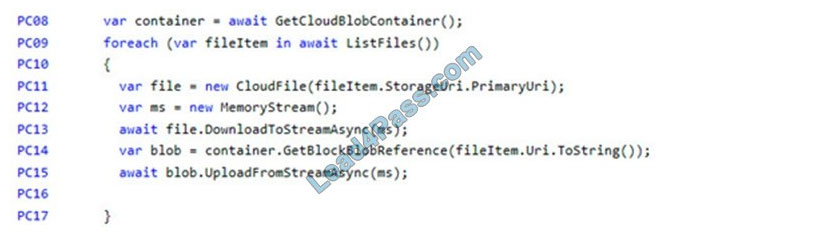

Question 10:

You need to resolve the capacity issue. What should you do?

A. Convert the trigger on the Azure Function to an Azure Blob storage trigger

B. Ensure that the consumption plan is configured correctly to allow scaling

C. Move the Azure Function to a dedicated App Service Plan

D. Update the loop starting on line PC09 to process items in parallel

Correct Answer: D

If you want to read the files in parallel, you cannot use forEach, because forEach does not wait for the promises that each async callback call returns. Instead, map the items to an array of promises and await that array with Promise.all.

Scenario: Capacity issue: During busy periods, employees report long delays between the time they upload the receipt and when it appears in the web application.
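The same idea, expressed in Python for illustration: `asyncio.gather` plays the role of `Promise.all`, starting every coroutine and awaiting all of them together. `process_item` is a hypothetical stand-in for the receipt-processing work, with `asyncio.sleep` simulating I/O latency; the sketch is not the code at line PC09.

```python
import asyncio
import time


async def process_item(item: int) -> int:
    """Hypothetical stand-in for I/O-bound work on one receipt."""
    await asyncio.sleep(0.05)  # simulated network or storage latency
    return item * 2


async def process_sequentially(items):
    # Awaiting inside a loop: each item waits for the previous one.
    return [await process_item(i) for i in items]


async def process_in_parallel(items):
    # Start all coroutines and wait for all of them, like Promise.all.
    # Results come back in the same order as the input.
    return await asyncio.gather(*(process_item(i) for i in items))


def timed(coro):
    """Run a coroutine to completion and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


items = list(range(10))
seq, seq_s = timed(process_sequentially(items))
par, par_s = timed(process_in_parallel(items))
print(seq == par, f"sequential {seq_s:.2f}s, parallel {par_s:.2f}s")
```

Running the items concurrently helps here because the work is I/O-bound; CPU-bound work would instead need separate processes or more instances.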

Reference: https://stackoverflow.com/questions/37576685/using-async-await-with-a-foreach-loop

Question 11:

You need to resolve the log capacity issue. What should you do?

A. Create an Application Insights Telemetry Filter

B. Change the minimum log level in the host.json file for the function

C. Implement Application Insights Sampling

D. Set a LogCategoryFilter during startup

Correct Answer: C

Scenario (log capacity issue): Developers report that the number of log messages in the trace output for the processor is too high, resulting in lost log messages.

Sampling is a feature in Azure Application Insights. It is the recommended way to reduce telemetry traffic and storage while preserving a statistically correct analysis of application data. The filter selects items that are related so that you can navigate between items when you are doing diagnostic investigations. When metric counts are presented to you in the portal, they are renormalized to take account of the sampling, to minimize any effect on the statistics.

Sampling reduces traffic and data costs and helps you avoid throttling.
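A simplified sketch of the two properties described above: keeping or dropping related items together, and renormalizing counts. This Python example hashes a hypothetical operation ID with SHA-256 so every item in the same operation gets the same keep/drop decision, and scales retained counts by 100 divided by the sampling percentage. It illustrates the idea only; the Application Insights SDKs implement their own sampling algorithm and configuration.

```python
import hashlib


def is_sampled_in(operation_id: str, sampling_percentage: float) -> bool:
    """Keep or drop all telemetry of one operation together.

    Hashing the operation ID gives the same decision for every item that
    shares it, so related requests, dependencies and traces are kept or
    dropped as a group.
    """
    digest = hashlib.sha256(operation_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # uniform value in [0, 1)
    return bucket < sampling_percentage / 100.0


def estimated_total(sampled_count: int, sampling_percentage: float) -> float:
    """Renormalize a count of retained items to an estimate of the true total."""
    return sampled_count * 100.0 / sampling_percentage


operation_ids = [f"op-{i}" for i in range(10_000)]
kept = sum(is_sampled_in(op, 20.0) for op in operation_ids)
print(kept, estimated_total(kept, 20.0))  # roughly 2000 kept, estimate near 10000
```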

Reference:

https://docs.microsoft.com/en-us/azure/azure-monitor/app/sampling

Question 12:

You need to ensure the security policies are met.

What code do you add at line CS07 of ConfigureSSE.ps1?

A. -PermissionsToKeys create, encrypt, decrypt

B. -PermissionsToCertificates create, encrypt, decrypt

C. -PermissionsToCertificates wrap key, unwrap key, get

D. -PermissionsToKeys wrap key, unwrap key, get

Correct Answer: D

Scenario: All certificates and secrets used to secure data must be stored in Azure Key Vault.

You must adhere to the principle of least privilege and provide only the privileges that are essential to perform the intended function.

The Set-AzureRmKeyVaultAccessPolicy parameter -PermissionsToKeys specifies an array of key operation permissions to grant to a user or service principal. The acceptable values for this parameter are: decrypt, encrypt, unwrapKey, wrapKey, verify, sign, get, list, update, create, import, delete, backup, restore, recover, purge.

Incorrect Answers:

A: Storage service encryption with a customer-managed key needs only the get, wrapKey, and unwrapKey key permissions; create, encrypt, and decrypt are not the permissions it uses.

B, C: The Set-AzureRmKeyVaultAccessPolicy parameter -PermissionsToCertificates specifies an array of certificate permissions to grant to a user or service principal. The acceptable values for this parameter are: get, list, delete, create, import, update, managecontacts, getissuers, listissuers, setissuers, deleteissuers, manageissuers, recover, purge, backup, restore.
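Checking a candidate parameter value against the acceptable values listed in this explanation can be done mechanically. The Python sketch below copies those two lists into sets (the names are hypothetical) and reports any requested permission that the parameter would not accept. It compares exact strings, which is a simplification; it does not call Azure PowerShell or validate against a live key vault.

```python
# Acceptable values for -PermissionsToKeys, as listed in the explanation.
KEY_PERMISSIONS = {
    "decrypt", "encrypt", "unwrapKey", "wrapKey", "verify", "sign", "get",
    "list", "update", "create", "import", "delete", "backup", "restore",
    "recover", "purge",
}

# Acceptable values for -PermissionsToCertificates, as listed in the explanation.
CERTIFICATE_PERMISSIONS = {
    "get", "list", "delete", "create", "import", "update", "managecontacts",
    "getissuers", "listissuers", "setissuers", "deleteissuers",
    "manageissuers", "recover", "purge", "backup", "restore",
}


def invalid_permissions(requested, acceptable):
    """Return the requested permissions that the parameter does not accept."""
    return sorted(set(requested) - set(acceptable))


print(invalid_permissions(["wrapKey", "unwrapKey", "get"], KEY_PERMISSIONS))  # []
print(invalid_permissions(["create", "encrypt", "decrypt"], CERTIFICATE_PERMISSIONS))  # ['decrypt', 'encrypt']
```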

Reference:

https://docs.microsoft.com/en-us/powershell/module/azurerm.keyvault/set-azurermkeyvaultaccesspolicy

Question 13:

You are writing code to create and run an Azure Batch job.

You have created a pool of compute nodes.

You need to choose the right class and its method to submit a batch job to the Batch service.

Which method should you use?

A. JobOperations.EnableJobAsync(String, IEnumerable,CancellationToken)

B. JobOperations.CreateJob()

C. CloudJob.Enable(IEnumerable)

D. JobOperations.EnableJob(String, IEnumerable)

E. CloudJob.CommitAsync(IEnumerable, CancellationToken)

Correct Answer: E

A Batch job is a logical grouping of one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. The app uses the BatchClient.JobOperations.CreateJob method to create a job on your pool.

The Commit method submits the job to the Batch service. Initially, the job has no tasks.

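```csharp
// Fragment: assumes batchClient is an authenticated BatchClient and that
// JobId and PoolId are string constants defined elsewhere in the app.
CloudJob job = batchClient.JobOperations.CreateJob();

job.Id = JobId;

// Run this job's tasks on the existing pool of compute nodes.
job.PoolInformation = new PoolInformation { PoolId = PoolId };

// Submit the job to the Batch service (CommitAsync is the asynchronous form).
job.Commit();
```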

…

References:

https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

Question 14:

You are developing a software solution for an autonomous transportation system. The solution uses large data sets and Azure Batch processing to simulate navigation sets for entire fleets of vehicles.

You need to create compute nodes for the solution on Azure Batch.

What should you do?

A. In the Azure portal, add a Job to a Batch account.

B. In a .NET method, call the method: BatchClient.PoolOperations.CreateJob

C. In Python, implement the class: JobAddParameter

D. In Azure CLI, run the command: az batch pool create

Correct Answer: B

A Batch job is a logical grouping of one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. The app uses the BatchClient.JobOperations.CreateJob method to create a job on your pool.

Note:

Step 1: Create a pool of compute nodes. When you create a pool, you specify the number of compute nodes for the pool, their size, and the operating system. When each task in your job runs, it's assigned to execute on one of the nodes in your pool.

Step 2: Create a job. A job manages a collection of tasks. You associate each job with a specific pool where that job's tasks will run.

Step 3: Add tasks to the job. Each task runs the application or script that you uploaded to process the data files it downloads from your Storage account. As each task completes, it can upload its output to Azure Storage.
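The pool, job, and task relationship in these steps can be modeled in a few lines. This Python sketch is a hypothetical in-memory model, not the Azure Batch SDK: `Pool`, `Job`, and `assign_tasks` are illustrative names, and round-robin assignment is an assumption made for the example, since the Batch service does its own scheduling of tasks onto nodes.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Dict, List


@dataclass
class Pool:
    pool_id: str
    node_count: int  # number of compute nodes
    vm_size: str     # node size (placeholder value below)


@dataclass
class Job:
    job_id: str
    pool: Pool  # each job is associated with one pool
    tasks: List[str] = field(default_factory=list)


def assign_tasks(job: Job) -> Dict[str, List[str]]:
    """Assign each task to a node of the job's pool, round-robin (an assumption)."""
    nodes = [f"{job.pool.pool_id}-node-{i}" for i in range(job.pool.node_count)]
    assignment: Dict[str, List[str]] = {node: [] for node in nodes}
    for task, node in zip(job.tasks, cycle(nodes)):
        assignment[node].append(task)
    return assignment


pool = Pool("navsim-pool", node_count=2, vm_size="example-vm-size")  # Step 1
job = Job("navsim-job", pool)                                        # Step 2
job.tasks.extend(["fleet-a", "fleet-b", "fleet-c"])                  # Step 3
print(assign_tasks(job))
# {'navsim-pool-node-0': ['fleet-a', 'fleet-c'], 'navsim-pool-node-1': ['fleet-b']}
```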

Incorrect Answers:

C: To create a Batch pool in Python, the app uses the PoolAddParameter class to set the number of nodes, VM size, and a pool configuration.

References: https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

Question 15:

You are developing a software solution for an autonomous transportation system. The solution uses large data sets and Azure Batch processing to simulate navigation sets for entire fleets of vehicles.

You need to create compute nodes for the solution on Azure Batch.

What should you do?

A. In the Azure portal, create a Batch account.

B. In a .NET method, call the method: BatchClient.PoolOperations.CreatePool

C. In Python, implement the class: JobAddParameter

D. In Python, implement the class: TaskAddParameter

Correct Answer: B

A Batch job is a logical grouping of one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. The app uses the BatchClient.JobOperations.CreateJob method to create a job on your pool.

Incorrect Answers:

C, D: To create a Batch pool in Python, the app uses the PoolAddParameter class to set the number of nodes, VM size, and a pool configuration.

References:

https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

https://docs.microsoft.com/en-us/azure/batch/quick-run-python

……

Use https://www.leads4pass.com/az-204.html to get the Microsoft AZ-204 dumps questions as preparation material. They cover the objectives of the Developing Solutions for Microsoft Azure exam and are guaranteed to help you achieve a high score on the exam.
